I'm Max Desiatov, a Ukrainian software engineer.

Introduction to structured concurrency in Swift: continuations, tasks, and cancellation

14 January, 2021

This article is part of my series about concurrency and asynchronous programming in Swift. The articles are independent, but after reading this one you might want to check out the rest of the series:

- How do closures and callbacks work? It’s turtles all the way down

- Event loops, building smooth UIs, and handling high server load

- Introduction to structured concurrency in Swift: continuations, tasks, and cancellation

IMPORTANT: This article is about an experimental feature, which is not available in a stable version of Swift as of January 2021. APIs and behaviors described in this article may change without notice as new development snapshots become available, but I will try to keep sample code updated as we get closer to general availability of structured concurrency in Swift.

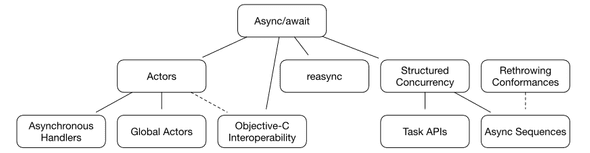

There are many discussions of the imminent introduction of async/await to Swift. The authors of the Swift concurrency proposals have done monumental work in preparing all of the related features, and there are many of them. Just have a look at the actual “dependency tree” of the proposals.

Around the time the async/await proposal was accepted, I saw people asking almost the same set of questions: how do you convert legacy APIs to async? What would an entry point to async code look like? While examples of async/await usage in UIKit apps were shared around, there were no good examples that utilized the structured concurrency proposal. I wanted to have a look at some minimal, isolated code snippets that could help me answer these questions.

Setting up a SwiftPM project

It turns out it’s relatively easy to set up a CLI project with SwiftPM that uses async/await.

Please feel free to clone the final result from GitHub to follow along. The README.md file in the repository also contains important installation steps, as the standard toolchain supplied with the latest stable Xcode currently won’t work. You’ll have to install a development toolchain as described in that document to run this example code on your own machine.

In the rest of the article I’m going to review this example project piece by piece.

Compiler flags

Notice how the target needs to be declared in Package.swift:

```swift
.target(
    name: "ConcurrencyExample",
    dependencies: [],
    swiftSettings: [
        // Enables the experimental concurrency features in the compiler frontend.
        .unsafeFlags(["-Xfrontend", "-enable-experimental-concurrency"]),
    ]
)
```

Until Swift concurrency features become stable and enabled by default, you have to pass these flags when building. They are declared in the package manifest so that you don’t have to pass them manually every time you build the project.

Imports

When using structured concurrency to expose legacy callback-based APIs as async functions, you currently have to import the _Concurrency module supplied with the latest Swift development snapshots. Its name starts with an underscore to highlight that the module is experimental and its API may change.

Reviewing the code

Continuations

While most of Apple’s APIs will be converted to async/await automatically, your own callback-based code can be converted using a few helper functions, such as withUnsafeContinuation and withUnsafeThrowingContinuation. They take a closure with a single continuation argument: you call your callback-based code from this closure, capture the continuation, and call resume on it when you’re finished. For more context, here’s what the declarations look like for the non-throwing variant:

```swift
struct UnsafeContinuation<T> {
    func resume(returning: T)
}

func withUnsafeContinuation<T>(
    operation: (UnsafeContinuation<T>) -> ()
) async -> T
```

One of the simplest, yet practical enough, examples of a non-throwing async call is sleep:

```swift
import _Concurrency
import Dispatch

func sleep(seconds: Int) async {
    await withUnsafeContinuation { c in
        // Resume the suspended caller once the deadline has passed.
        DispatchQueue.global()
            .asyncAfter(deadline: .now() + .seconds(seconds)) {
                c.resume(returning: ())
            }
    }
}
```

And here’s the throwing variant:

```swift
struct UnsafeThrowingContinuation<T, E: Error> {
    func resume(returning: T)
    func resume(throwing: E)
}

func withUnsafeThrowingContinuation<T>(
    operation: (UnsafeThrowingContinuation<T, Error>) -> ()
) async throws -> T
```

Based on that, we can convert URLSession.dataTask to a throwing async function:

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLSession lives in this module on Linux
#endif

struct UnknownError: Error {}

func download(_ url: String) async throws -> Data {
    print("fetching \(url)")
    return try await withUnsafeThrowingContinuation { c in
        let task = URLSession.shared.dataTask(with: URL(string: url)!) { data, _, error in
            // Every path resumes the continuation exactly once.
            switch (data, error) {
            case let (_, error?):
                c.resume(throwing: error)
            case let (data?, _):
                c.resume(returning: data)
            case (nil, nil):
                c.resume(throwing: UnknownError())
            }
        }
        task.resume()
    }
}
```

Here the continuation argument c of the closure passed to withUnsafeThrowingContinuation becomes your “callback”. You have to call resume on it exactly once, with either a success value or an Error value. Multiple calls to resume on the same continuation are invalid, since async functions can’t throw or return more than once. If you never call resume on the continuation, you’ll get a function that never returns or throws, which is just as wrong as throwing or returning more than once.
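The same pattern works for any callback-based API. The sketch below assumes a Swift 5.5 or newer toolchain, whose released API also offers checked variants, withCheckedContinuation and withCheckedThrowingContinuation: a checked continuation traps if it is resumed twice and logs a warning if it is discarded without ever being resumed, which catches exactly the mistakes described above. The legacySquareRoot(of:completion:) function and NegativeInputError type are hypothetical stand-ins for your own callback-based code.

```swift
import Dispatch

// Hypothetical error reported by the legacy API below.
struct NegativeInputError: Error {}

// Hypothetical legacy API that reports its outcome through a Result-based
// callback on a background queue.
func legacySquareRoot(of value: Double, completion: @escaping (Result<Double, Error>) -> Void) {
    DispatchQueue.global().async {
        if value < 0 {
            completion(.failure(NegativeInputError()))
        } else {
            completion(.success(value.squareRoot()))
        }
    }
}

// Async wrapper: the checked continuation takes the place of the callback.
// resume(with:) forwards either the success value or the error, so the
// continuation is resumed exactly once whichever way the callback fires.
func squareRoot(of value: Double) async throws -> Double {
    try await withCheckedThrowingContinuation { continuation in
        legacySquareRoot(of: value) { result in
            continuation.resume(with: result)
        }
    }
}
```

Forwarding the whole Result with resume(with:) avoids writing a separate resume call per case, which leaves fewer paths on which a resume could be forgotten or duplicated.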

The entry point

As you probably noticed, the previous examples gave us async functions, but how do you actually call them from non-async code? Currently, the runAsyncAndBlock function is the entry point you would use to interact with an async function from your blocking synchronous code:

```swift
runAsyncAndBlock {
    print("task started")
    let data = try! await download("https://httpbin.org/uuid")
    print(String(data: data, encoding: .utf8)!)
}
print("end of main")
```

Note that in the current toolchain (DEVELOPMENT-SNAPSHOT-2021-01-12-a at the time of writing), runAsyncAndBlock does not accept throwing closures, so try! or do/catch blocks are required to call a throwing async function such as download.

The print statements are added so that you can observe the order of execution and make sure that your async code is actually called.

Remember that you should not use runAsyncAndBlock in GUI code running on the main thread, as it will block the event loop from processing any user input. This will leave your app frozen until the async closure body has finished. In a CLI app that doesn’t expect any input this is fine, though. In GUI apps you’d either have to call runAsyncAndBlock on a background thread that can be blocked, or use async handlers or actors to host your async code. Given that the proposals for async handlers and actors aren’t available yet, we’re leaving those advanced topics out and focusing on a simple command-line app here.
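To make the blocking behavior concrete, here is a rough sketch of what a runAsyncAndBlock-style bridge could look like on a Swift 5.5 or newer toolchain. runBlocking and ResultBox are hypothetical names, not part of any Swift module. The helper starts the async closure in a detached task and parks the calling thread on a DispatchSemaphore until the closure has produced a value, which is exactly why such a helper must never run on a GUI app’s main thread. In released Swift versions, an executable can instead declare a @main type with a static func main() async method as its async entry point.

```swift
import Dispatch

// Hypothetical box that carries the result from the detached task back to
// the blocked thread. The semaphore orders the single write before the read.
final class ResultBox<Value>: @unchecked Sendable {
    var value: Value?
}

// Hypothetical helper that mimics runAsyncAndBlock: it runs `operation` on
// the concurrency runtime and blocks the calling thread until it finishes.
func runBlocking<Value>(_ operation: @escaping @Sendable () async -> Value) -> Value {
    let box = ResultBox<Value>()
    let finished = DispatchSemaphore(value: 0)
    Task.detached {
        box.value = await operation()
        finished.signal()
    }
    finished.wait()  // blocks this thread until the task signals
    return box.value!
}
```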

Launching concurrent tasks with “async let”

Let’s say you need to fetch UUIDs for multiple entities, but you can only get a single one per asynchronous call. In a naive implementation you would await the async calls one by one until you’ve fetched all of the data. This is highly inefficient if the calls are independent and can all run simultaneously without interfering with each other.

You can launch multiple async tasks concurrently with async let declarations. Then, if you need to wait for all of them to complete, a single await marker on an expression that uses both values declared with async let is enough:

```swift
func childTasks() async throws -> String {
    print("\(#function) started")
    // Both downloads start immediately and run concurrently.
    async let uuid1 = download("https://httpbin.org/uuid")
    async let uuid2 = download("https://httpbin.org/uuid")
    // A single `try await` waits for both child tasks.
    return try await """
    ids fetched concurrently:
    uuid1: \(String(data: uuid1, encoding: .utf8)!)
    uuid2: \(String(data: uuid2, encoding: .utf8)!)
    """
}
```

Here two separate tasks, uuid1 and uuid2, are launched concurrently, and they don’t block each other. The try await markers in front of the string literal mean that the string won’t be built, and the function won’t return, until both tasks have completed. Compare this with the old Dispatch approach, where instead of async let you’d have to set up a DispatchGroup instance, which is much more cumbersome to work with.
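For comparison, here is a rough sketch of a DispatchGroup version of the two concurrent fetches. To keep it self-contained, the hypothetical fetchValue(_:completion:) function delivers a fixed string after a short delay instead of calling download, and the hypothetical PartialResults class guards the partial results with a serial queue to avoid data races.

```swift
import Dispatch

// Hypothetical callback-based fetch standing in for download(_:):
// it delivers `value` on a background queue after a short delay.
func fetchValue(_ value: String, completion: @escaping (String) -> Void) {
    DispatchQueue.global().asyncAfter(deadline: .now() + .milliseconds(100)) {
        completion(value)
    }
}

// Storage for the partial results, guarded by a serial queue.
final class PartialResults: @unchecked Sendable {
    private let queue = DispatchQueue(label: "partial-results")
    private var first: String?
    private var second: String?

    func setFirst(_ value: String) { queue.sync { first = value } }
    func setSecond(_ value: String) { queue.sync { second = value } }
    func both() -> (String, String) { queue.sync { (first!, second!) } }
}

// The DispatchGroup counterpart of two `async let` declarations and one await.
func fetchTwoValues(completion: @escaping (String, String) -> Void) {
    let group = DispatchGroup()
    let results = PartialResults()

    // Every enter() must be balanced by exactly one leave().
    group.enter()
    fetchValue("uuid1") { value in
        results.setFirst(value)
        group.leave()
    }

    group.enter()
    fetchValue("uuid2") { value in
        results.setSecond(value)
        group.leave()
    }

    // Called only after both leave() calls have happened.
    group.notify(queue: .global()) {
        let (first, second) = results.both()
        completion(first, second)
    }
}
```

Balancing enter() and leave() by hand, synchronizing the partial results yourself, and receiving the combined value in yet another callback are all things async let takes care of for you.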

Cancellation

Tasks launched with async let are called “child tasks”, and this is important when you think about cancellation. async let forms an implicit task hierarchy that reflects the call stack of async functions. In the code above, the task that runs await childTasks() acts as the parent, and childTasks() spawns two child tasks for the download calls. When a parent task is cancelled, its child tasks are cancelled too.
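Here is a small sketch of cancellation flowing from a parent task to an async let child. It assumes Swift 5.5 or later, where isCancelled is a static property on Task rather than an async function, and the function names are hypothetical. The child sleeps with Task.sleep(nanoseconds:), which throws once its task is cancelled, and then reports what Task.isCancelled says.

```swift
// Child operation that reports whether it observed cancellation.
// Task.sleep(nanoseconds:) throws CancellationError when the task is
// cancelled; `try?` discards that error so the flag can be inspected instead.
func childObservesCancellation() async -> Bool {
    try? await Task.sleep(nanoseconds: 2_000_000_000)
    return Task.isCancelled
}

// The parent starts the child with `async let`, so the child joins the
// parent's task hierarchy and inherits its cancellation.
func parentWithChild() async -> Bool {
    async let childSawCancellation = childObservesCancellation()
    return await childSawCancellation
}
```

Only the parent is ever cancelled directly: the child learns about it because it belongs to the parent’s task hierarchy.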

The most critical thing to highlight is that cancellation in Swift’s structured concurrency is cooperative, i.e. tasks need to “cooperate” explicitly to be cancelled as early as possible. This is done with the checkCancellation() and isCancelled() static functions declared on the Task type in the _Concurrency module:

```swift
extension Task {
    /// Returns `true` if the task is cancelled, and should stop executing.
    ///
    /// This function returns instantly and will never suspend.
    static func isCancelled() async -> Bool

    /// The default cancellation thrown when a task is cancelled.
    ///
    /// This error is also thrown automatically by `Task.checkCancellation()`,
    /// if the current task has been cancelled.
    struct CancellationError: Error {
        // no extra information, cancellation is intended to be light-weight
        init() {}
    }

    /// Check if the task is cancelled and throw
    /// a `CancellationError` if it was.
    ///
    /// This function returns instantly and will never suspend.
    static func checkCancellation() async throws
}
```

Why do you need to call these functions instead of relying on the concurrency runtime to cancel tasks automatically? The runtime doesn’t know what your task is doing: it may need to save and close a file handle, save the state of an expensive computation, or do whatever other cleanup is needed. Cancelling tasks preemptively would lead to much more complexity, forcing tasks to account for possible cancellation at any arbitrary line of code.

A try await Task.checkCancellation() call executed within a task throws Task.CancellationError if that task was previously cancelled, meaning that nothing after it in the same function scope will be executed. If you have any meaningful cleanup to do, add a defer block, or use the non-throwing isCancelled() boolean check to handle cancellation in an even more explicit way.
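A sketch of a job that cooperates with cancellation, assuming Swift 5.5 or later. In the released API, checkCancellation() is a synchronous throwing function and the error is the top-level CancellationError type rather than Task.CancellationError. sumInChunks(upTo:chunkSize:) is a hypothetical example, and its defer block stands in for real cleanup work.

```swift
// Hypothetical long-running job: sums 0..<limit in chunks and cooperates
// with cancellation by calling Task.checkCancellation() between chunks.
func sumInChunks(upTo limit: Int, chunkSize: Int = 1_000) async throws -> Int {
    defer {
        // Runs on every exit path, including when checkCancellation() throws,
        // so cleanup such as closing a file handle would belong here.
        print("sumInChunks: cleanup")
    }
    var total = 0
    var start = 0
    while start < limit {
        // Throws CancellationError if the current task has been cancelled.
        try Task.checkCancellation()
        let end = min(start + chunkSize, limit)
        for n in start..<end {
            total += n
        }
        start = end
        // Suspension point that gives other tasks a chance to run.
        await Task.yield()
    }
    return total
}
```

Checking once per chunk rather than once per number keeps the overhead low while still stopping soon after cancellation is requested.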

Task handles

How would you actually cancel a task? Remember that async let creates an implicit hierarchy of child tasks. There’s a way to manage tasks explicitly with detached tasks, for which you get a handle value as soon as you launch them. Such a task has no implicit scope and is not included in any task hierarchy, so you have to manage it yourself. What does the Task.Handle type we’re operating on here look like?

```swift
extension Task {
    struct Handle<Success, Failure: Error>: Equatable, Hashable {
        /// Retrieve the result produced by the task, if it is the normal return value, or
        /// throw the error that completed the task with a thrown error.
        func get() async throws -> Success
    }
}

extension Task.Handle where Failure == Never {
    /// Retrieve the result produced by a task that is known to never throw.
    func get() async -> Success
}
```

Importantly, task handles allow you to cancel the corresponding task:

```swift
extension Task.Handle {
    /// Cancel the task referenced by this handle.
    func cancel()

    /// Determine whether the task was cancelled.
    var isCancelled: Bool { get }
}
```

To launch a detached task and get a handle for it, use Task.runDetached:

```swift
extension Task {
    /// Create a new, detached task that produces a value of type `T`.
    @discardableResult
    static func runDetached<T>(
        priority: Priority = .default,
        operation: @escaping () async -> T
    ) -> Handle<T, Never>

    /// Create a new, detached task that produces a value of type `T`
    /// or throws an error.
    @discardableResult
    static func runDetached<T>(
        priority: Priority = .default,
        operation: @escaping () async throws -> T
    ) -> Handle<T, Error>
}
```

Note that you can even assign a priority to such a task, but I consider that an advanced topic that deserves its own separate article.
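The handle API changed on its way to a stable release. Assuming Swift 5.5 or later, Task.runDetached is spelled Task.detached, the handle is the Task&lt;Success, Failure&gt; value itself, its value property takes the place of get(), and priorities are TaskPriority values such as .background. A minimal sketch, with startDetachedWork() as a hypothetical helper:

```swift
// Launch a detached task; in the released API the handle has type Task<Int, Error>.
func startDetachedWork() -> Task<Int, Error> {
    Task.detached(priority: .background) {
        // Task.sleep(nanoseconds:) throws CancellationError if the task is
        // cancelled while sleeping, or was already cancelled when it starts.
        try await Task.sleep(nanoseconds: 100_000_000)
        return 42
    }
}
```

Awaiting value on a handle that was never cancelled yields 42, while calling cancel() on a fresh handle makes the same await throw CancellationError.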

Cancellation is cooperative

Let’s see how it works in practice and add a cancellation check to childTasks:

```swift
func childTasks() async throws -> String {
    print("\(#function) started")
    async let uuid1 = download("https://httpbin.org/uuid")
    async let uuid2 = download("https://httpbin.org/uuid")

    // Throws Task.CancellationError if the enclosing task has been cancelled.
    try await Task.checkCancellation()
    print("\(#function) is not cancelled yet")

    return try await """
    ids fetched concurrently:
    uuid1: \(String(data: uuid1, encoding: .utf8)!)
    uuid2: \(String(data: uuid2, encoding: .utf8)!)
    """
}
```

The function looks almost the same as before, with just two lines added in the middle: try await Task.checkCancellation() and a print immediately after it.

To make things more interesting, let’s launch a task that sleeps for one second, then starts childTasks(), and finally prints the result when it’s available:

```swift
// run this as a detached task and get a handle for it
let handle = Task.runDetached {
    await sleep(seconds: 1)
    try await print(childTasks())
}
```

To see the effect of cancellation, our example cancels the detached task immediately after launching it, then catches and prints any errors that result:

```swift
// cancel the task immediately through the handle
handle.cancel()

do {
    try await handle.get()
} catch {
    if error is Task.CancellationError {
        print("task cancelled")
    } else {
        print(error)
    }
}
```

Let’s build and run the final example with cancellation and observe its output in the terminal:

```
childTasks() started
fetching https://httpbin.org/uuid
fetching https://httpbin.org/uuid
task cancelled
end of main
```

Hm, why does it print childTasks() started if we cancelled the task immediately? Remember that tasks you launch have to explicitly check for cancellation and react appropriately. The sleep function does not check for anything, and the first cancellation check on the execution path happens right after the implicit uuid1 and uuid2 tasks are launched. That’s why both downloads still start, but their results are never printed: the cancellation check in the middle throws before the parent task reaches its return statement.
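If you want the task to stop before childTasks() even starts, the sleep itself has to cooperate with cancellation. A sketch assuming Swift 5.5 or later, where Task.sleep(nanoseconds:) throws CancellationError when its task is cancelled; StepRecorder and sleepThenWork(recorder:) are hypothetical names used to record which steps actually ran.

```swift
// Records which steps of a job actually ran.
actor StepRecorder {
    private(set) var steps: [String] = []

    func record(_ step: String) {
        steps.append(step)
    }
}

// Same shape as the detached task above: sleep first, then start the work.
// Unlike the Dispatch-based sleep(seconds:), Task.sleep(nanoseconds:) checks
// for cancellation and throws, so nothing after it runs once cancelled.
func sleepThenWork(recorder: StepRecorder) async throws {
    try await Task.sleep(nanoseconds: 1_000_000_000)
    await recorder.record("work started")
}
```

When this job is cancelled right after launch, awaiting it throws CancellationError and the recorder never receives "work started".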

Summary

In this example we learned how to set up a SwiftPM CLI project with the new experimental concurrency features enabled. We had a look at the new _Concurrency module and the new types introduced in it: continuations and task handles. We learned how to call async functions from non-async code, how to launch new concurrent tasks with async let, and how to launch detached tasks with Task.runDetached. We also had a look at cooperative cancellation and how to support it in your async code.

Concurrency in Swift is a big topic, and there’s a lot more we omitted here that deserves separate articles. I hope you liked this one; feel free to send me a message on Twitter or Mastodon with your feedback!

If you enjoyed this article, please consider becoming a sponsor. My goal is to produce content like this and to work on open-source projects full time; every single contribution brings me closer to it! This also benefits you as a reader and as a user of my open-source projects, ensuring that my blog and projects are constantly maintained and improved.